a.k.a. MQTT-RF Bridge for Ceiling-Projecting Alarm Clock Temperature

By Greg J. Badros <badros@gmail.com>

First draft 2020-03-29; last updated 2020-04-08; moved to Blogger 2021-02-14

Update: If you or someone you love has diabetes, check out Gluroo! It's an iOS and Android mobile app that makes managing diabetes as easy as messaging.

Motivation

In February 2020, my son was diagnosed with Type 1 Diabetes. Within 4 days we had him wearing a Dexcom G6 Continuous Glucose Monitor (CGM). My wife and I each follow his CGM readings 24/7, but at night, our primary source of information is a ceiling-projection alarm clock. I decided to find a way to project our son’s real-time blood glucose readings unobtrusively on the ceiling of our bedroom.

First approach — Build a simple ceiling projector

At first I tried to find a ceiling-projection multi-segment LCD component of some kind. Though I was hopeful, I soon became discouraged: I couldn't find anything easy to use in a project. I found various tutorials on making a simple projector, but the parts list, the required experimentation with brightness, and the need to package the result as something I’d be willing to put on the nightstand would each have added cost and complexity.

Second approach — Use a Ceiling-Projecting Alarm Clock

After becoming more pessimistic about the first idea, I realized I could reuse an existing ceiling-projecting alarm clock and just tap into its display, or drive the display with my own logic. Then I realized it was even easier than that: many ceiling-projecting alarm clocks can display a temperature from a remote module that is meant to sense the outdoor temperature. If I could reverse-engineer that outdoor thermometer and temperature transmitter, I could transmit whatever number I wanted as the temperature, and the clock would project that number on the ceiling. So “all” I had to do was find a ceiling-projecting alarm clock that showed three digits of temperature (e.g., 24.3 degC), reverse-engineer the outdoor thermometer module and its protocol, and build a new transmitter to send a different “temperature” to the clock. Here’s the end result:

Selecting the Alarm Clock

I already owned and liked a circa-2005 Oregon Scientific projection clock (Model BAR338PA), but its outdoor temperature sensor/transmitter had died long ago. I searched for a replacement without luck, though I did learn that models THC138, THR138, and RT918 were supposedly compatible with our projection clock. I’d still love to reverse-engineer those transmitters so my setup would work with that clock, but in the short term, I needed another projection clock.

After a quick look through the options on Amazon, I decided to try the Smartro Time & Temperature Projection Alarm Clock. It’s pretty basic, but it has a small footprint, displays temperatures with 3 equal-size digits (in the projected view), and has a separate transmitter. I bought one to try.

Reverse Engineering the RF Protocol

I’d previously identified URH (Universal Radio Hacker) as a software tool to help with reverse engineering, and from its device compatibility list, I chose the tiny and inexpensive NooElec NESDR Nano2+ software-defined radio (SDR) receiver to listen in on the RF transmissions.

I made a few assumptions before learning how to use URH:

- The projection clock devices are made in China, so I suspected that the temperature would be transmitted in Celsius/Centigrade (not Fahrenheit).

- These devices are inexpensive, so I suspected they’d send temperatures as integers with the decimal point shifted rather than bother with floating-point representations. E.g., 23.9 degC would be sent as the integer 239.

- The user manual and the device’s FCC registration indicated that it used the common 433.92MHz frequency for radio communications.

So I plugged the SDR into a USB port, fired up URH, and pointed its spectrum analyzer at frequencies around 433.9MHz. (I also listened with CubicSDR, which NooElec suggests for the device; it has a good spectrum analyzer that can play the “heard” RF signals through your speakers.) Sure enough, the antenna picked up a burst of data every 55 seconds or so at 433.92MHz, and just after each transmission, the clock updated the displayed outdoor temperature. The transmissions stopped when I removed the batteries from the transmitter, and there were three bursts immediately after I reinserted them, so I was confident I was seeing the right signal.

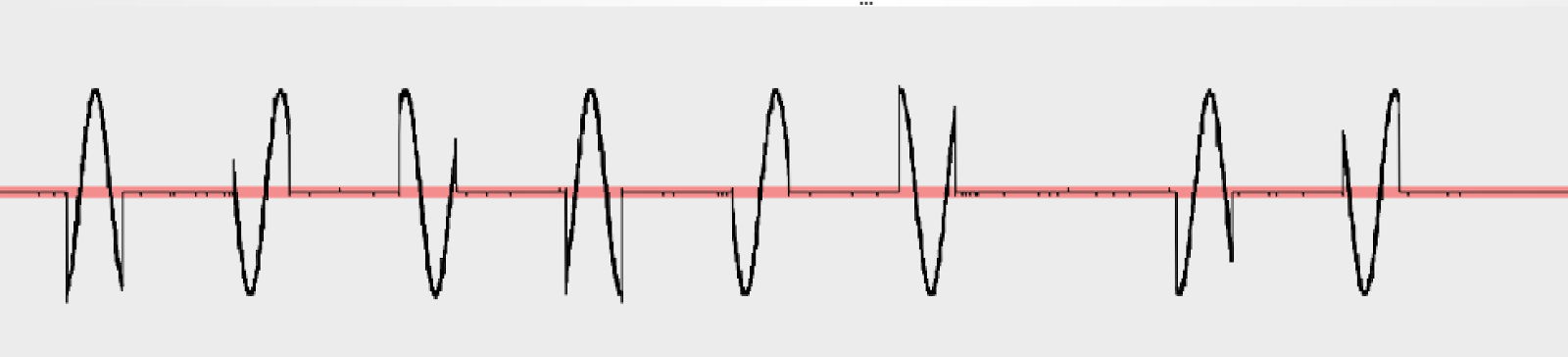

The raw RF signal looked like this in URH when the temperature was 25.8 degC:

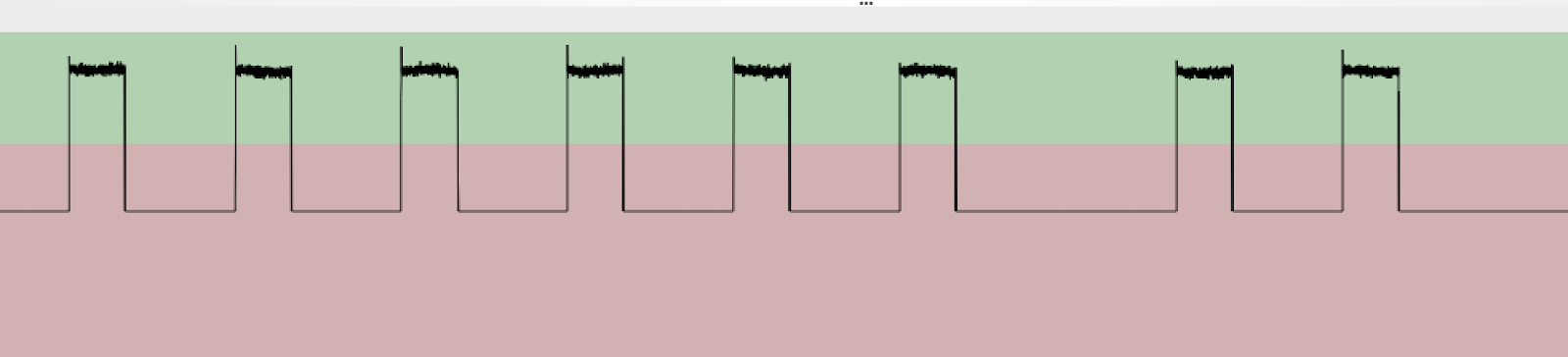

You can clearly see a modulated sine carrier wave, and it’s also clear that its amplitude (not its frequency) is being modulated. Technically this is OOK (On-Off Keying), the simplest form of amplitude-shift keying (ASK), so I applied URH’s ASK demodulation and got a waveform showing the sequence of bits in the RF chatter. I used URH’s “autodetect parameters” to help find the red/green 0/1 split point and a bit length of 500 microseconds.

From this URH gives me the following bits:

10010010010010010000100100001000010010010010010010010000100100100100100100100001001000010000100001000010000100001001000010000100001000010000101000000001001001001001001000010010000100001001001001001001001000010010010010010010…
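URH did this slicing for me, but the idea is simple enough to sketch. Assuming the demodulated signal has already been reduced to a list of (level, duration in microseconds) runs (a hypothetical input format; URH does not hand you exactly this), each run is rounded to a whole number of 500-microsecond slots, and each slot becomes one character:

```python
def slice_ook(runs, slot_us=500):
    """Quantize (level, duration_us) runs of an OOK signal into one character per slot.

    level is 1 while the carrier is on and 0 while it is off. Each run is rounded
    to the nearest whole number of slots (at least one) to absorb timing jitter.
    """
    bits = []
    for level, duration_us in runs:
        slots = max(1, round(duration_us / slot_us))
        bits.append(str(level) * slots)
    return "".join(bits)


# Hypothetical measured runs: a pulse, a ~1 ms gap, a pulse, a ~2 ms gap.
runs = [(1, 480), (0, 1030), (1, 510), (0, 1990)]
print(slice_ook(runs))  # prints 10010000
```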

It’s immediately obvious that there are many more 0s than 1s in this representation, suggesting that these aren’t the logically important bits of the transmission and that some other decoding is needed. At this point I recorded a handful of other transmissions at different temperatures. In particular, I put the transmitter in the freezer for 15 minutes to get a very different temperature reading. Here are the bits sent for 2.4 degC:

1001001001001001000010010000100001001001001001001001001001001001000010000100100100100001000010000100001001001001000010000100100100001010000000010010010010010010000100100001000010010010010010010010010010010010000100001001001…

And then I converted these two temperatures to binary using the assumptions I made upfront:

25.8 degC = 258 = 0b100000010

2.4 degC = 24 = 0b11000

I did those conversions so I’d know which binary sequence to look for in each of the two demodulated bit sequences (presumably after another decoding step). (I had 7–8 more transmission waveforms at other temperatures, but I focused on just those two to keep things manageable.)
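In code, the conversion is just the decimal-point shift from my second assumption followed by rendering the integer in binary. A minimal Python sketch for non-negative readings (the function name is hypothetical):

```python
def expected_bits(temp_c: float) -> str:
    """Shift the decimal point one place (25.8 -> 258) and render the integer in binary."""
    scaled = round(temp_c * 10)  # round() absorbs float error such as 2.4 * 10
    return format(scaled, "b")


for t in (25.8, 2.4):
    print(t, "degC ->", round(t * 10), "->", "0b" + expected_bits(t))
# 25.8 degC -> 258 -> 0b100000010
# 2.4 degC -> 24 -> 0b11000
```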

My first idea for further decoding these raw transmitted bits was to group them into triplets. I split the bit strings into 3-bit groups and built a histogram:

| Count | Pattern |
|---|---|
| 152 | 010 |
| 148 | 001 |
| 147 | 100 |
| 126 | 000 |
| 4 | 101 |

On the one hand, it was encouraging to see a relatively even split among 010, 001, and 100, with 000 (quiet on the RF) as the outlier. On the other hand, the 4 occurrences of 101 were weird, and given how close the transmitter was to the snooping antenna, I discounted the possibility that they were errors or noise. I also wasn’t sure how to think about this, since one would expect the RF protocol to be designed to be robust against chopping off the beginning or end of a transmission, and reasoning about the encoding in terms of offsets that are multiples of 3 from some “start” position seemed fragile. But that’s where I quit for the day.
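The triplet histogram itself is only a few lines. This is a Python sketch, not the script I actually used; note that it starts grouping at offset 0, which is exactly the fragility mentioned above, since shifting the start by one or two bits changes every group:

```python
from collections import Counter


def triplet_histogram(bits: str) -> Counter:
    """Count non-overlapping 3-bit groups starting at offset 0 (a trailing partial group is dropped)."""
    usable = len(bits) - len(bits) % 3
    return Counter(bits[i:i + 3] for i in range(0, usable, 3))


raw = "1001001001001001"  # beginning of the 25.8 degC capture; paste the full string here
for pattern, count in triplet_histogram(raw).most_common():
    print(f"{count}: {pattern}")
```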

The next day I went back to the demodulated waveform and looked at it some more. That’s when I had my key insight: the peaks simply separated quiet periods of two different lengths, either 1000 microseconds (1ms, or 2 bits) or 2000 microseconds (2ms, or 4 bits). Maybe, I thought, interpreting the shorter quiet periods as “0” and the longer ones as “1” would yield a higher-level representation of the intended transmission. That is, in the bit sequence, “00” between two “1”s is a “0” and “0000” between two “1”s is a “1”. I wrote a quick Perl script to do that conversion and got the following for 25.8 degC:

RAW:

10010010010010010000100100001000010010010010010010010000100100100100100100100001001000010000100001000010000100001001000010000100001000010000101000000001

(each “00” between two “1”s is interpreted as one “0”, and each “0000” as one “1”)

DECODED:

00000101 1000000100 00001011111101111110..

The binary for 258 (remember 25.8 degC), 100000010, showed up in the decoding above! I repeated this on the other readings and each time found the temperature’s binary representation inside the decoded RF transmission. Some further experimentation confirmed that negative temperatures are, as you’d guess, encoded in two’s complement. The signal also repeats itself about 10 times per transmission, each time separated by a 6 “0” quiet period (3ms).
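The pulse-gap decoding is easy to express with a regular expression. The following Python sketch is a stand-in for my Perl script, not a copy of it. Gap lengths are counted in 500-microsecond slots, any gap other than 2 or 4 slots is emitted as a space so that repetitions split apart, and `temperature_field` shows the tenths-of-a-degree value in two’s complement; its 12-bit width is an assumption for illustration, not something I measured:

```python
import re


def decode_gaps(raw: str) -> str:
    """Turn 500 us slots into logical bits by measuring the quiet gap after each pulse.

    Two 0-slots (1 ms) decode to "0", four 0-slots (2 ms) decode to "1", and any
    other gap length (e.g. the separator between repetitions) becomes a space.
    """
    out = []
    # Each match is a pulse plus the run of 0s up to, but not including, the next pulse.
    for gap in re.findall(r"1(0+)(?=1)", raw):
        if len(gap) == 2:
            out.append("0")
        elif len(gap) == 4:
            out.append("1")
        else:
            out.append(" ")
    return "".join(out)


def temperature_field(temp_c: float, width: int = 12) -> str:
    """Tenths of a degree as a fixed-width two's-complement bit string (width is assumed)."""
    tenths = round(temp_c * 10)
    return format(tenths & ((1 << width) - 1), f"0{width}b")


print(decode_gaps("10010000100100001"))  # prints 0101
print(temperature_field(25.8))           # prints 000100000010
print(temperature_field(-2.4))           # prints 111111101000
```

To check a capture, decode it and search for the expected field, e.g. `decode_gaps(raw).find(temperature_field(25.8))`; a result of -1 means the field wasn't found.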

Across multiple different temperature readings, the prefix to the temperature bits was consistent, but the suffix varied. The same temperature seemed to give the same suffix, but I could not decipher how to compute the suffix from the temperature, so I decided to ignore that puzzle for a bit and see if it mattered.

Next up, I needed to see if I could use my understanding of the protocol to synthesize an RF transmission that would be interpreted by the clock as coming from the temperature transmitter.

Transmitting Reverse-Engineered Protocol

For testing, I needed a transmitter to send these signals. A friend recommended a YardStickOne, which probably would’ve sufficed for both receiving and transmitting, but I hedged a bit and only bought a receiver at first: I wasn’t sure I’d be able to decode the protocol, and I figured I might skip prototyping the transmission from my laptop entirely and go straight to the next step of using a small transmitter IC and a microcontroller. In parallel, I ordered some Arduino-compatible 433 MHz transmitters and receivers. As it turned out, those arrived first, so I skipped the YardStickOne transmission test entirely and proceeded to code up the sketch for the Particle Photon.

Designing the BGL Interface to the Transmitter

I wanted to RF-transmit the blood glucose level (BGL) from a Dexcom G6, and there’s plenty of open-source code out there (e.g., NightScout) for acting as a Dexcom Follower to get the value from the CGM through the Internet. So I wrote a tiny script that fetches the BGL and publishes it as a message on an MQTT bus that I already run for my smart home. I wrapped that up as a Docker container so it stays running. That simplified the microcontroller device: it only has to RF-transmit the MQTT messages it receives, rather than make HTTPS queries itself (an HTTP query on the Particle Photon is pretty trivial, but the SSL handshake adds significant complexity to an otherwise simple sketch).

Thus, the design for the microcontroller-based component is simple: it listens on a pre-specified MQTT topic for a message that is simply an integer, and it broadcasts that integer via the connected RF radio.

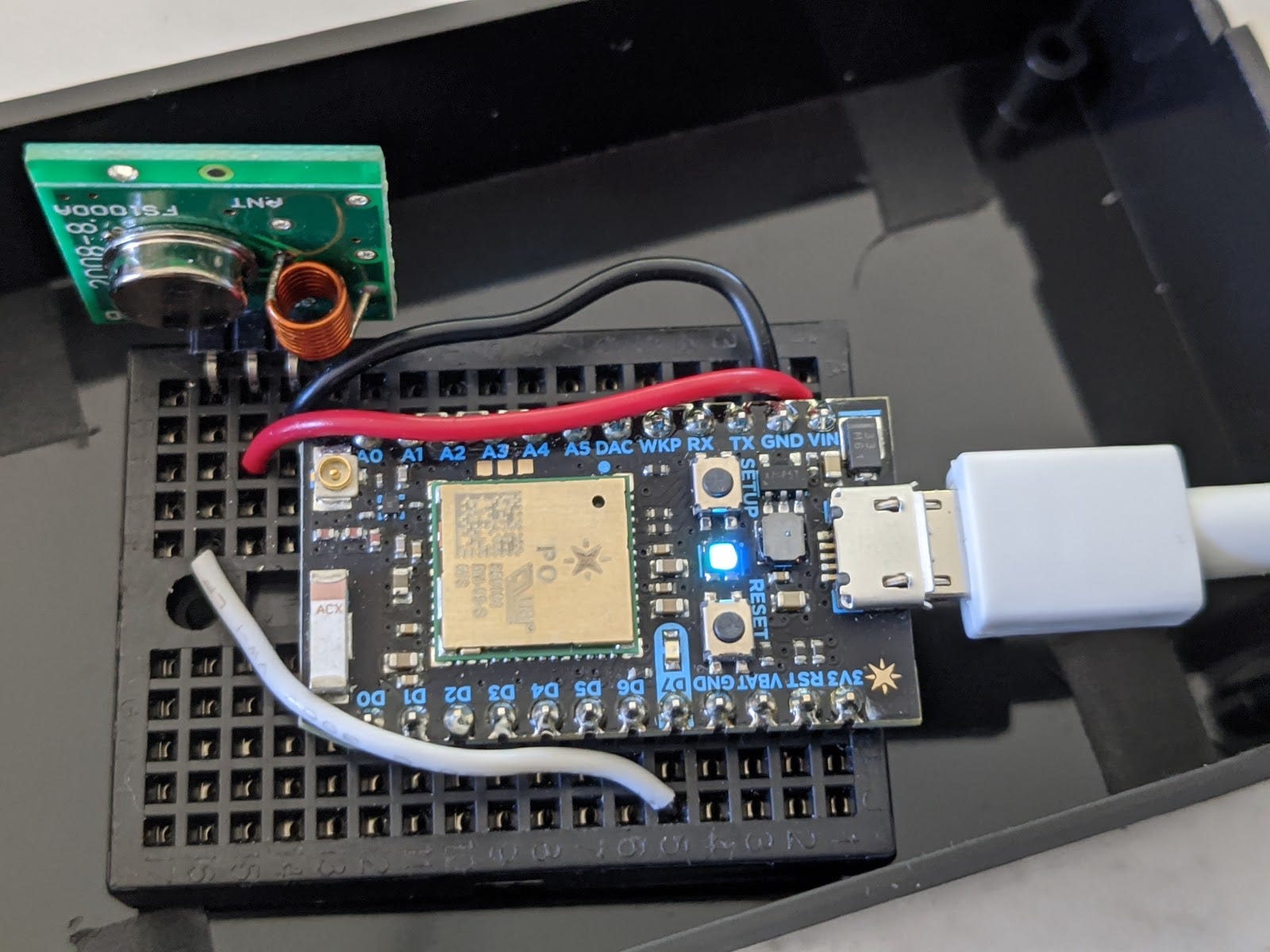
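Because the payload is just an integer, both ends of the MQTT bus stay trivial. A minimal sketch of the encode/parse pair, assuming the value travels as ASCII decimal text; the function names and the 0 to 999 range check are hypothetical (the range simply mirrors the clock’s three digits):

```python
from typing import Optional


def encode_bgl_message(bgl: int) -> bytes:
    """Payload the fetch script would publish: the BGL as plain ASCII digits."""
    return str(int(bgl)).encode("ascii")


def parse_bgl_message(payload: bytes) -> Optional[int]:
    """What the bridge does with a received payload: accept a bare integer, reject anything else."""
    try:
        value = int(payload.decode("ascii").strip())
    except (UnicodeDecodeError, ValueError):
        return None
    # Hypothetical sanity check: the projected display only has three digits.
    return value if 0 <= value <= 999 else None
```

Rejecting malformed payloads (rather than transmitting them) keeps the last good value on the ceiling if something upstream misbehaves.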

Building the MQTT-RF Bridge Device

My go-to microcontroller is the Particle Photon, and this project was no different. I used just five parts. Here’s the BOM (Bill of Materials):

- Particle Photon microcontroller

- Aukru 3X 433MHz RF Wireless Transmitter and Receiver Module Kit

- micro breadboard

- plastic case

- USB power supply and cable

The transmitter draws little enough power that the Photon itself can power it, so the connections are simple:

The sketch is available on GitHub (see Source Code below). Originally, I planned to use the RadioHead library, but it wasn’t trivial to make it work with the Particle Photon, so I tried driving the RF transmitter directly from the D7 pin, and that worked (in the sense that I could see the transmissions on my NooElec RF snooper).

At this point, I was debugging by comparing the demodulated waveforms of the same temperature as transmitted by the stock outdoor thermometer transmitter and by my homebrewed device. A couple of small tweaks to the analog signal were needed. For example, between the repetitions of the temperature and its padding, the signal is an exception to the short/long pause rule for 0/1: it is low for 500 microseconds, then high for 500, then low again for 4ms. I still wasn’t sure this would work with the clock, but making the waveforms look the same when snooped seemed likely to drive the receiver in the projection clock.
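The timing rules above can be written down as a schedule of (level, duration) runs. This Python sketch assumes my reading of the captures: each data bit is a 500-microsecond pulse followed by a 1ms (0) or 2ms (1) gap; the separator is a closing pulse, 500 low, 500 high, then 4ms low; and a transmission repeats the packet about ten times. On the Photon, each run would plausibly become a `digitalWrite()` on D7 followed by `delayMicroseconds()`. The function names are hypothetical, and `to_slots` renders the schedule in the same one-character-per-500-microseconds form as the URH captures, so homebrewed and stock waveforms can be compared directly:

```python
PULSE_US = 500       # every pulse is ~500 microseconds of carrier
ZERO_GAP_US = 1000   # short quiet period -> logical 0
ONE_GAP_US = 2000    # long quiet period -> logical 1


def packet_runs(bits: str):
    """(level, duration_us) runs for one repetition of a packet, followed by the separator."""
    runs = []
    for b in bits:
        runs.append((1, PULSE_US))
        runs.append((0, ONE_GAP_US if b == "1" else ZERO_GAP_US))
    # Separator as read from the captures: closing pulse, low 500, high 500, low 4 ms.
    runs += [(1, PULSE_US), (0, 500), (1, 500), (0, 4000)]
    return runs


def transmission_runs(bits: str, repeats: int = 10):
    """A whole burst: the packet repeated back to back (about ten times)."""
    return packet_runs(bits) * repeats


def to_slots(runs, slot_us=500):
    """Render runs as one character per 500 us slot, the same view URH gives of a capture."""
    return "".join(str(level) * (duration // slot_us) for level, duration in runs)


print(to_slots(packet_runs("01")))  # prints 1001000010100000000
```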

Finally, I tested with the clock and had some trouble getting consistent results. The transmitted reading always showed up when I put the clock in “synchronize” mode (by holding the “+” button for 3 seconds), but it would stop working some time later. I spent a bunch more time suspecting that those extra bits were a checksum of some sort (but they weren’t an easy binary function of the temperature, and I doubted they bothered with a lookup table to compute a checksum) or a sequence number (but they didn’t appear to be monotonically increasing).

After some further experimentation, it turned out that the projection clock only listens for a radio transmission on a fixed schedule, roughly every 57 seconds! With that realization, I updated my MQTT publishing script to publish exactly every 57 seconds. That worked better, but the timing still wasn’t reliable, and really the interval is a property of the link between the RF transmitter and the projection clock, so it’s a better design to have the Particle Photon sketch manage the 57-second timing itself. I reworked the sketch to remember the most recent MQTT message and, instead of transmitting from the message callback, transmit the last-received value exactly every 57 seconds. That works reliably… done!
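Here is the shape of that final design as a Python sketch rather than the Photon’s C++ (the class and method names are hypothetical). `transmit` stands in for the code that keys the radio, and the clock is injectable so the behavior can be tested without waiting. Sending the first value immediately and keeping later sends on a fixed 57-second grid are assumptions, not necessarily what the sketch on GitHub does:

```python
import time
from typing import Callable, Optional

RESEND_PERIOD_S = 57.0  # the clock's listening interval, found by experiment


class MqttRfBridge:
    """Remember the latest MQTT value; transmit it on a fixed cadence, not on arrival."""

    def __init__(self, transmit: Callable[[int], None],
                 clock: Callable[[], float] = time.monotonic,
                 period_s: float = RESEND_PERIOD_S) -> None:
        self._transmit = transmit  # hypothetical hook that keys the RF transmitter
        self._clock = clock
        self._period_s = period_s
        self._latest: Optional[int] = None
        self._next_send: Optional[float] = None

    def on_message(self, value: int) -> None:
        # MQTT callback: just record the value; the radio is not touched here.
        self._latest = value

    def loop(self) -> None:
        # Call this repeatedly from the main loop.
        if self._latest is None:
            return  # nothing received yet
        now = self._clock()
        if self._next_send is None:
            self._next_send = now  # first value: send right away
        if now >= self._next_send:
            self._transmit(self._latest)
            # Stay on the original 57 s grid even if this call ran late,
            # skipping missed slots instead of sending a burst to catch up.
            while self._next_send <= now:
                self._next_send += self._period_s
```

With a real MQTT client, `on_message` would be wired to the subscription callback and `loop` called from the main loop.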

Improvements

It would sure be nice to know what those extra bits in the RF message are for, but ultimately they don’t seem to make a difference. I also wish the projection clock projected only the outdoor temperature instead of alternating between the inside and outside temperatures. I may repeat the process with a different projection clock after researching the options even more carefully.

The fact that the indoor and outdoor temperatures alternate is even worse when projecting in Celsius, which is how I started using the clock: 19.7 degC is a reasonable indoor temperature, and 197 is also a reasonable (though high) blood glucose level. In Fahrenheit, by contrast, an indoor reading such as 68.0 degF reads as 680, which can’t be mistaken for a BGL. So I switched the code to convert the incoming BGL from the MQTT message into a Celsius temperature before sending it, so that when the clock converts it to Fahrenheit, the projected digits show the BGL. Unfortunately, this conversion is slightly lossy: e.g., if the BGL is 128, we can tell the clock it’s -10.7 degC (12.7 degF, for 127) or -10.6 degC (12.9 degF, for 129), but we can’t get the clock to show 128. This isn’t a big deal in practice, since a single-digit difference in BGL isn’t important, but it’s a little annoying.
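The conversion is a one-line inversion of F = C × 9/5 + 32. The clock should show BGL/10 degF (12.8 for 128), so working in tenths of a degree, C10 = (BGL - 320) × 5/9, rounded to the nearest tenth that the protocol can carry. A Python sketch, assuming the clock itself rounds its Fahrenheit conversion to the nearest tenth (the function names are hypothetical):

```python
def bgl_to_celsius_tenths(bgl: int) -> int:
    """Celsius tenths to send so that the clock's Fahrenheit reading spells out the BGL.

    Target F10 = bgl, and F10 = C10 * 9/5 + 320, so C10 = (bgl - 320) * 5/9.
    """
    return round((bgl - 320) * 5 / 9)


def clock_shows(celsius_tenths: int) -> int:
    """Assumed clock behavior: convert to Fahrenheit and round to the nearest tenth."""
    return round(celsius_tenths * 9 / 5 + 320)


c10 = bgl_to_celsius_tenths(128)
print(c10, clock_shows(c10), clock_shows(c10 + 1))  # prints -107 127 129
```

The two rounding steps are where the lossiness comes from: the Celsius value can be off by up to 4/9 of a tenth, which becomes up to 0.8 of a tenth in Fahrenheit, enough to land one digit away from the BGL.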

Source Code

See https://github.com/gjbadros/particle-photon-mqtt-rf-bridge.git